Hello everyone. In this article, let’s go through the buzzwords Docker, Containers, Virtual Machines, and Containerization. First of all, let’s start with Containerization.

Containerization

Containerization is the process of bundling the software code with all its libraries, frameworks, and other dependencies so that it can be packaged and shipped as a single entity.

The software or application within the container can be moved and run consistently in any environment and on any infrastructure, be it on-premises or cloud. It is largely independent of the operating system distribution installed there, although it still relies on a compatible host kernel. The container is basically a fully functional and portable computing environment.

Before containers, when an application was shipped to another platform or OS, compatibility issues often resulted in bugs, errors, and glitches that needed fixing (meaning more time, less productivity, and a lot of frustration).

When you package an application in a container, it can be used wherever you move it, across platforms and infrastructures, because it carries everything it needs to run successfully.

The idea of process isolation has been around for years, but when Docker introduced Docker Engine in 2013, it set a standard for container use with tools that were easy for developers to use, as well as a universal approach for packaging, which then accelerated the adoption of container technology.

Containers vs Virtual Machines

Containers and Virtual Machines are often confused. Let me clear that up here.

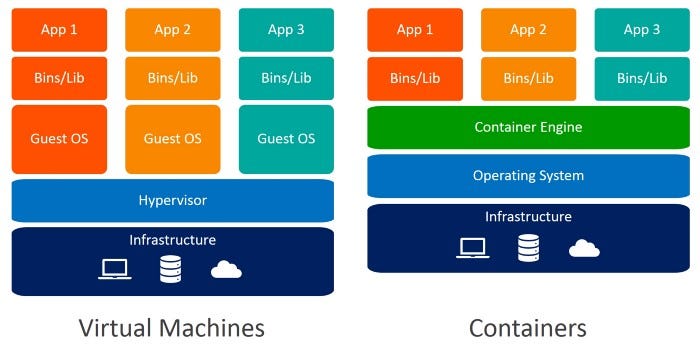

Containers and virtual machines are very similar resource virtualization technologies. Virtualization is the process in which a system’s singular resource like RAM, CPU, Disk, or Networking can be ‘virtualized’ and represented as multiple resources. The key differentiator between containers and virtual machines is that virtual machines virtualize an entire machine down to the hardware layers and containers only virtualize software layers above the operating system level.

As server processing power and capacity increased, bare metal applications weren’t able to exploit the new abundance in resources. That’s when Virtual Machines were born by running software on top of physical servers to emulate a particular hardware system. A hypervisor, or a virtual machine monitor, is software, firmware, or hardware that creates and runs VMs. It’s what sits between the hardware and the virtual machine and is necessary to virtualize the server.

Within each virtual machine runs its own guest operating system. VMs with different operating systems can run on the same physical server: a UNIX VM can sit alongside a Linux VM, and so on. Each VM has its own binaries, libraries, and applications that it services, and the VM may be many gigabytes in size. Examples of this are VirtualBox and VMware, which I am sure most of us have installed on top of Windows at some point.

Then came the Containers sitting on top of a physical server and its host OS — for example, Linux or Windows. Each container shares the host OS kernel and, usually, the binaries and libraries, too. Shared components are read-only. Containers are thus exceptionally “light” — they are only megabytes in size and take just seconds to start, versus gigabytes and minutes for a VM.

Containers also reduce management overhead. Because they share a common operating system, only a single operating system needs maintenance. In a nutshell, containers are lighter-weight and more portable than VMs, and with the advent of Docker, containers are now very common.

Docker

With this understanding of containers and containerization, let’s move on to Docker. The concept of Docker is “Build Once. Ship Anywhere”.

Docker is an open platform for developing, shipping, and running applications. Docker enables you to separate your applications from your infrastructure so you can deliver software quickly. With Docker, you can manage your infrastructure in the same ways you manage your applications. By taking advantage of Docker’s methodologies for shipping, testing, and deploying code quickly, you can significantly reduce the delay between writing code and running it in production.

Docker is one of several container technologies, and it is the best known. Other tools in the container ecosystem, ranging from engines and runtimes to image builders, include:

- Podman

- LXD

- containerd

- Buildah

- BuildKit

- Kaniko

- runc

In this article, let us focus only on Docker.

Docker is written in the Go programming language and takes advantage of several features of the Linux kernel to deliver its functionality. Docker uses a technology called namespaces to provide the isolated workspace called the container. When you run a container, Docker creates a set of namespaces for that container. These namespaces provide a layer of isolation. Each aspect of a container runs in a separate namespace and its access is limited to that namespace.
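To make namespaces a little more concrete, here is a minimal Python sketch that lists the namespaces the current process belongs to. It is only an illustration, not how Docker itself works internally: it assumes a Linux host where `/proc/self/ns` is available, and the helper name `current_namespaces` is made up for this article.

```python
import os
from pathlib import Path


def current_namespaces(proc_dir="/proc/self/ns"):
    """Return {namespace_type: kernel identifier} for the current process.

    On systems without a Linux-style /proc (for example macOS or Windows),
    an empty dict is returned.
    """
    ns_dir = Path(proc_dir)
    if not ns_dir.is_dir():
        return {}
    namespaces = {}
    for entry in sorted(ns_dir.iterdir()):
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]".
            namespaces[entry.name] = os.readlink(entry)
        except OSError:
            # Skip entries we are not permitted to read.
            continue
    return namespaces


if __name__ == "__main__":
    for name, ident in current_namespaces().items():
        print(f"{name:10s} {ident}")
```

If you run this once on the host and once inside a container, the identifiers for namespaces such as pid, net, and mnt would typically differ, which is the isolation described above.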

Architecture of Docker

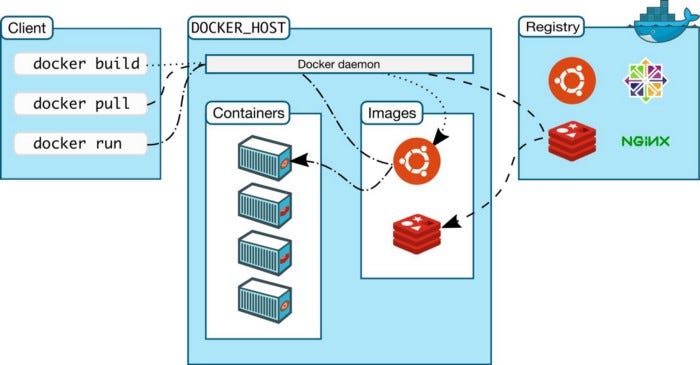

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing your Docker containers. The Docker client and daemon can run on the same system, or you can connect a Docker client to a remote Docker daemon. The Docker client and daemon communicate using a REST API, over UNIX sockets or a network interface. Another Docker client is Docker Compose, which lets you work with applications consisting of a set of containers.
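The client-to-daemon conversation can be sketched with nothing but the Python standard library. The example below is a rough illustration under a few assumptions: the daemon listens on the default socket path `/var/run/docker.sock`, the current user is allowed to read it, and the class and function names (`UnixSocketHTTPConnection`, `daemon_version`) are invented for this article. It sends a `GET /version` request, which is one of the Docker Engine API endpoints.

```python
import http.client
import json
import socket


class UnixSocketHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        # The host name only fills the Host header; "localhost" is a placeholder.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def daemon_version(socket_path="/var/run/docker.sock"):
    """Ask the daemon for its version info; return None if it is unreachable."""
    conn = UnixSocketHTTPConnection(socket_path)
    try:
        conn.request("GET", "/version")
        response = conn.getresponse()
        if response.status != 200:
            return None
        return json.loads(response.read())
    except (OSError, AttributeError, http.client.HTTPException, ValueError):
        # Covers a missing socket, no permission, no AF_UNIX support, or bad JSON.
        return None
    finally:
        conn.close()
```

Calling `daemon_version()` on a machine with a running daemon should return a dictionary of version details, and `None` otherwise. In practice you would use the docker CLI or an official SDK rather than hand-rolled HTTP, but the sketch shows that the client is essentially an HTTP client pointed at a socket.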

The Docker daemon

The Docker daemon (dockerd) listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes. A daemon can also communicate with other daemons to manage Docker services.

The Docker client

The Docker client (docker) is the primary way that many Docker users interact with Docker. When you use commands such as docker run, the client sends these commands to dockerd, which carries them out. The docker command uses the Docker API. The Docker client can communicate with more than one daemon.

Docker Desktop

Docker Desktop is an easy-to-install application for your Mac, Windows, or Linux environment that enables you to build and share containerized applications and microservices. Docker Desktop includes the Docker daemon (dockerd), the Docker client (docker), Docker Compose, Docker Content Trust, Kubernetes, and Credential Helper.

Docker registries

A Docker registry stores Docker images. Docker Hub is a public registry that anyone can use, and Docker is configured to look for images on Docker Hub by default. This is similar to the way GitHub hosts source code. You can also run your own private registry, or use a cloud-hosted one such as AWS ECR.

When you use the docker pull or docker run commands, the required images are pulled from your configured registry. When you use the docker push command, your image is pushed to your configured registry.
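The "Docker Hub by default" behaviour shows up in how short image names are expanded. The Python sketch below is a simplified approximation of that expansion, not Docker’s actual parser: it ignores digests (`@sha256:...`) and other edge cases, and the function name `normalize_image_reference` and the host `registry.example.com` are hypothetical.

```python
DEFAULT_REGISTRY = "docker.io"
DEFAULT_NAMESPACE = "library"
DEFAULT_TAG = "latest"


def normalize_image_reference(name):
    """Expand a short image name into registry/repository:tag form (simplified)."""
    first, slash, rest = name.partition("/")
    # The first path component counts as a registry host only if it looks like one.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = DEFAULT_REGISTRY, name

    # A tag, if present, follows a colon in the last path component.
    repository, colon, tag = remainder.rpartition(":")
    if not colon or "/" in tag:
        repository, tag = remainder, DEFAULT_TAG

    # Official Docker Hub images live under the "library" namespace.
    if registry == DEFAULT_REGISTRY and "/" not in repository:
        repository = f"{DEFAULT_NAMESPACE}/{repository}"

    return f"{registry}/{repository}:{tag}"


print(normalize_image_reference("ubuntu"))
print(normalize_image_reference("registry.example.com/team/app:v1"))
```

The first call expands to `docker.io/library/ubuntu:latest`, while the second already names a registry and a tag, so it comes back unchanged. That is why a pull of a bare name goes to Docker Hub, while a name that starts with a registry host goes to that registry.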

Docker objects

When you use Docker, you are creating and using images, containers, networks, volumes, plugins, and other objects. This section is a brief overview of some of those objects.

Docker Images

An image is a read-only template with instructions for creating a Docker container. Often, an image is based on another image, with some additional customization. For example, you may build an image that is based on the ubuntu image, but installs the Apache web server and your application, as well as the configuration details needed to make your application run.

You might create your own images or you might only use those created by others and published in a registry. To build your own image, you create a Dockerfile with a simple syntax for defining the steps needed to create the image and run it.

Each instruction in a Dockerfile creates a layer in the image. When you change the Dockerfile and rebuild the image, only the layers that have changed, along with the layers built on top of them, are rebuilt; earlier layers are reused from the build cache. This is part of what makes images so lightweight, small, and fast when compared to other virtualization technologies.
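The layer reuse can be modelled in a few lines of Python. This is a toy model, not Docker’s real cache: it assumes a layer’s identity depends only on the instruction text and on the layer beneath it, whereas the real builder also considers things like the contents of copied files. The function names and the sample Dockerfile lines are made up for illustration.

```python
import hashlib


def layer_ids(instructions):
    """Return one ID per instruction; each ID depends on all earlier instructions."""
    ids = []
    parent = ""
    for instruction in instructions:
        digest = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()[:12]
        ids.append(digest)
        parent = digest
    return ids


def layers_to_rebuild(old_instructions, new_instructions):
    """List the instructions in the new Dockerfile whose layers miss the cache."""
    cached = set(layer_ids(old_instructions))
    return [
        instruction
        for instruction, layer_id in zip(new_instructions, layer_ids(new_instructions))
        if layer_id not in cached
    ]


old = ["FROM ubuntu", "RUN apt-get install -y apache2", "COPY app/ /var/www/html/"]
new = ["FROM ubuntu", "RUN apt-get install -y apache2 curl", "COPY app/ /var/www/html/"]
print(layers_to_rebuild(old, new))
```

In this model the `FROM` layer is reused, while the edited `RUN` line and the unchanged `COPY` line after it are both rebuilt, because the `COPY` layer sits on top of a layer that changed. This is one reason instructions that change rarely are usually placed near the top of a Dockerfile.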

Docker Containers

A container is a runnable instance of an image. You can create, start, stop, move, or delete a container using the Docker API or CLI. You can connect a container to one or more networks, attach storage to it, or even create a new image based on its current state.

By default, a container is relatively well isolated from other containers and its host machine. You can control how isolated a container’s network, storage, or other underlying subsystems are from other containers or from the host machine.

A container is defined by its image as well as any configuration options you provide to it when you create or start it. When a container is removed, any changes to its state that are not stored in persistent storage disappear.
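The last point, that state which is not kept in persistent storage disappears with the container, can be pictured with a small toy model in Python. Everything here is hypothetical and greatly simplified: `ToyContainer` is not a Docker API, plain dictionaries stand in for file systems, and the lookup order is chosen only for the sake of the illustration.

```python
from types import MappingProxyType


class ToyContainer:
    """Toy model: read-only image files plus a private writable layer."""

    def __init__(self, image_files, volume=None):
        self.image_files = MappingProxyType(image_files)  # read-only view of the image
        self.writable_layer = {}  # discarded when the container is removed
        self.volume = volume if volume is not None else {}  # outlives the container

    def write(self, path, data, persistent=False):
        target = self.volume if persistent else self.writable_layer
        target[path] = data

    def read(self, path):
        # Look in the volume first, then the writable layer, then the image.
        for source in (self.volume, self.writable_layer, self.image_files):
            if path in source:
                return source[path]
        raise FileNotFoundError(path)


image = {"/etc/motd": "hello"}
volume = {}

first = ToyContainer(image, volume)
first.write("/tmp/scratch", "temp")
first.write("/data/db", "rows", persistent=True)
del first  # "remove" the container; its writable layer goes with it

second = ToyContainer(image, volume)
print(second.read("/data/db"))
```

The second container, created from the same image and volume, still sees `/data/db`, but reading `/tmp/scratch` would raise `FileNotFoundError`, and the image itself is never modified.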

Monitoring the Docker Containers — The Docker Dashboard

The Docker Dashboard gives you a quick view of the containers running on your machine. The Docker Dashboard is part of Docker Desktop, so it is available for Mac, Windows, and Linux. It gives you quick access to container logs, lets you get a shell inside the container, and lets you easily manage the container lifecycle (stop, remove, etc.).

I work as a freelance Architect at Ontoborn, who are experts in putting together a team needed for building your product. This article was originally published on my personal blog.